AN IN-DEPTH LOOK AT 3D SENSING

Coherent is a leading provider of advanced illumination solutions for 3D sensing applications.

The world is three dimensional. That statement is so obvious that most of us never question how we perceive it. But, in fact, each of our eyes captures a flat image – just like a camera. And it’s only in our brains that the magic of forming a 3D perception from those two flat images occurs.

Today, we increasingly ask digital systems to interact with the 3D world – whether it’s to interpret gestural controls, perform facial recognition, or automatically pilot a vehicle. To accomplish these tasks, we need to give them at least some of our ability to perceive depth.

DEPTH SENSING

There are two basic approaches used for 3D (depth) sensing in digital imaging: triangulation and time-of-flight (ToF) measurements. Sometimes these techniques are even combined.

Triangulation is based on geometry. One form of triangulation – binocular vision – is the way that human 3D (stereoscopic) vision operates. We have two eyes separated horizontally. This means that each eye sees the world from a slightly different angle. This difference in perspective creates a parallax, meaning a shift in the position of an object relative to the background depending on which eye you’re looking with. Our brains then use this parallax information to sense the depth (distance) of objects in our field of view and create our single, unified, 3D perception of the world.

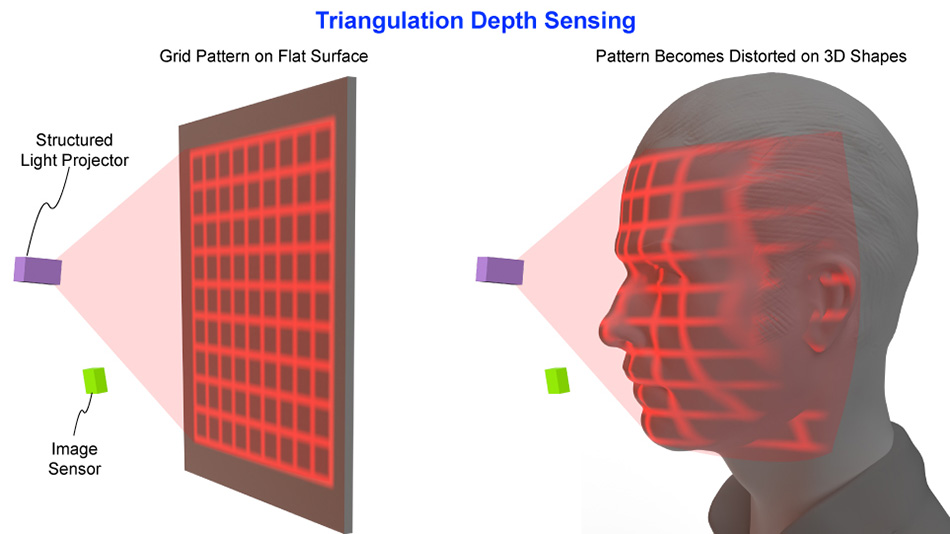

But stereo vision depends on lighting conditions and requires distinctly textured surfaces, which makes it difficult to implement reliably. Instead, computer vision systems often use another form of triangulation that relies on “structured light.” This is just a fancy name for projecting a pattern (like a series of lines or numerous spots of light) onto an object and analyzing the distortion of this pattern from a slightly different angle. This takes much less processing power than recreating true binocular vision, and it enables a computer to rapidly calculate depth information and reconstruct a 3D scene.

In one form of triangulation depth sensing, a structured light pattern is projected onto the scene, and an imaging system analyzes the distortion of this pattern to derive depth information for the illuminated area.

Triangulation methods excel in high-resolution mapping of surfaces. They work best over shorter distances, making them very useful for tasks like facial recognition.
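
To make the geometry concrete, here is a minimal sketch of the standard triangulation relation for a rectified pinhole setup, where depth equals focal length times baseline divided by the observed shift (disparity). The article does not specify a particular model, so the formula choice, the function names, and the numbers (a 1000-pixel focal length and a 5 cm baseline) are illustrative assumptions. The same relation applies whether the pair is two cameras or a projector and a camera.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Depth Z = f * B / d for an idealized, rectified pinhole pair (assumed model)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def depth_step_per_pixel(focal_length_px: float, baseline_m: float,
                         depth_m: float) -> float:
    """Approximate depth change caused by a one-pixel change in disparity.

    Derived from |dZ/dd| = Z**2 / (f * B); it grows with the square of the distance.
    """
    return depth_m ** 2 / (focal_length_px * baseline_m)


# Hypothetical values chosen only for illustration.
FOCAL_LENGTH_PX = 1000.0
BASELINE_M = 0.05

near = depth_from_disparity(FOCAL_LENGTH_PX, BASELINE_M, 100.0)  # about 0.5 m
far = depth_from_disparity(FOCAL_LENGTH_PX, BASELINE_M, 25.0)    # about 2.0 m

near_step = depth_step_per_pixel(FOCAL_LENGTH_PX, BASELINE_M, near)  # about 5 mm
far_step = depth_step_per_pixel(FOCAL_LENGTH_PX, BASELINE_M, far)    # about 80 mm
```

Under these assumptions, a one-pixel measurement error costs roughly 16 times more depth accuracy at 2 m than at 0.5 m, which is one way to see why triangulation favors shorter distances.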

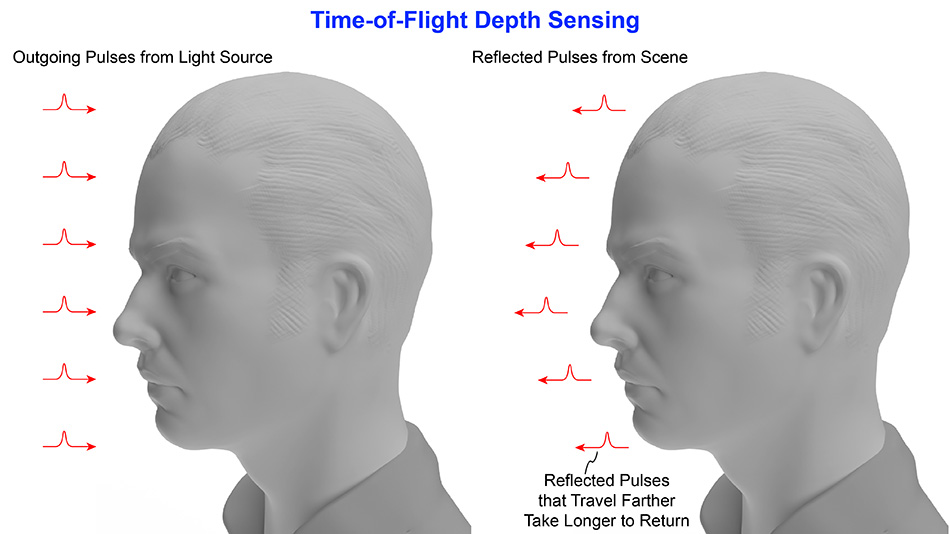

Time-of-Flight imaging (ToF) comes in two different forms. In “direct Time-of-Flight” (dToF), the scene is illuminated with pulses of light, and the system measures the time it takes for the reflected light pulses to return. Since the speed of light is known, this return time can be directly converted into distance: the light travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. If this calculation is performed independently for each pixel in an image, then a depth value at each point in the scene can be derived.
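
The conversion from return time to distance can be sketched in a few lines. This is a simplified illustration, not a description of any particular sensor: the function names are hypothetical, and the sketch assumes an ideal measurement with no noise, no electronic delays, and light traveling at its vacuum speed.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def dtof_distance(round_trip_time_s: float) -> float:
    """Distance = c * t / 2, because the pulse travels out and back."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def dtof_depth_map(round_trip_times_s):
    """Apply the conversion independently to every pixel of a 2D grid of times."""
    return [[dtof_distance(t) for t in row] for row in round_trip_times_s]


# A pulse that returns after 10 ns corresponds to an object about 1.5 m away.
distance_10ns = dtof_distance(10e-9)

# Each nanosecond of round-trip time corresponds to about 15 cm of distance.
distance_per_ns = dtof_distance(1e-9)

# Hypothetical 2 x 2 "image" of measured round-trip times, in seconds.
depth_map = dtof_depth_map([[10e-9, 12e-9],
                            [20e-9, 40e-9]])
```

The per-nanosecond figure shows why timing matters so much: resolving depth differences of a few centimeters requires timing resolution well below a nanosecond.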

The second form of ToF is “indirect Time-of-Flight” (iToF). Here, the illumination is a continuous, modulated signal. The system measures the phase shift of this modulation in the returned light. This provides the data used to calculate object distances.
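
As a rough sketch of how a phase shift becomes a distance, the example below uses the commonly cited relation for a single modulation frequency: distance equals the speed of light times the phase shift, divided by 4π times the modulation frequency. The article does not state which modulation scheme is used, so this relation, the function names, and the 100 MHz frequency are assumptions made for illustration.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def itof_distance(phase_shift_rad: float, modulation_freq_hz: float) -> float:
    """Distance = c * phi / (4 * pi * f) for a single modulation frequency (assumed model)."""
    return SPEED_OF_LIGHT_M_PER_S * phase_shift_rad / (4.0 * math.pi * modulation_freq_hz)


def itof_unambiguous_range(modulation_freq_hz: float) -> float:
    """Largest distance before the phase wraps past 2*pi: c / (2 * f)."""
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * modulation_freq_hz)


MODULATION_FREQ_HZ = 100e6  # hypothetical 100 MHz modulation

# A quarter-cycle phase shift (pi / 2) corresponds to roughly 0.37 m.
quarter_cycle_distance = itof_distance(math.pi / 2.0, MODULATION_FREQ_HZ)

# Beyond roughly 1.5 m the phase repeats and distances become ambiguous.
max_range = itof_unambiguous_range(MODULATION_FREQ_HZ)
```

Because phase repeats every full cycle, a single frequency can only report distances within its unambiguous range. A lower modulation frequency extends that range, but each radian of phase then spans more distance.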

ToF technology shines in its ability to measure over larger areas and distances quickly. This makes it ideal for tasks like room scanning in virtual reality headsets or obstacle detection for robot navigation.

Direct Time-of-Flight sensing measures the round-trip travel time of light pulses and converts the time intervals into distance measurements.

3D SENSING LIGHT SOURCE REQUIREMENTS

The characteristics of the light source are crucial in determining the effectiveness and accuracy of both triangulation and ToF 3D sensing methods. Each application has unique illumination requirements, although all of them share certain common needs.

Triangulation benefits from a coherent light source. This provides greater flexibility in terms of the patterns that can be created. It also enables the system to form higher-resolution structured patterns and to maintain pattern integrity over longer distances.

A triangulation light source also needs to have stable beam-pointing characteristics. Any fluctuations in beam pointing can lead to inaccurate depth measurements.

ToF systems require a light source capable of emitting either short, precise pulses of light (dToF) or continuous output that can be modulated at high frequencies (iToF). Precise pulse timing and modulation frequency, together with short rise and fall times, are paramount for accurate distance measurement.

ToF systems, especially those using flood illumination to cover large areas or long distances, generally require higher output powers than triangulation systems. This ensures that the return light will have sufficient intensity to be detected, and that the system will function well with high levels of ambient light.

As output power scales up, the need for power efficiency (the ratio of optical output power to input electrical power) becomes more important. Efficiency becomes particularly relevant for portable (battery operated) devices.
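
The efficiency ratio defined above is simple enough to show as a short calculation. The power figures below are hypothetical values chosen only to illustrate the arithmetic; they do not describe any specific light source.

```python
def power_efficiency(optical_output_w: float, electrical_input_w: float) -> float:
    """Ratio of optical output power to electrical input power."""
    if electrical_input_w <= 0:
        raise ValueError("electrical input power must be positive")
    return optical_output_w / electrical_input_w


def required_electrical_power_w(optical_output_w: float, efficiency: float) -> float:
    """Electrical input power needed to reach a target optical output."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    return optical_output_w / efficiency


# Hypothetical source: 2 W of light from 5 W of electrical input.
efficiency = power_efficiency(2.0, 5.0)  # 0.4, i.e. 40%

# Electrical power needed for the same 2 W of light at two assumed efficiencies.
input_at_40_percent = required_electrical_power_w(2.0, 0.40)  # about 5 W
input_at_50_percent = required_electrical_power_w(2.0, 0.50)  # about 4 W
```

In this illustration, raising efficiency from 40% to 50% cuts the electrical draw for the same optical output by about a fifth, which is the kind of saving that matters in a battery-operated device.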